AIVA

AIVA (A.I. Virtual Assistant): General-purpose virtual assistant for developers. http://kengz.me/aiva/

It is a generalized bot: you can implement any feature, use it with major AI tools, deploy it across platforms, and code in multiple languages.

| AIVA is | Details |
|---|---|
| general-purpose | An app interface, AI assistant, anything! |
| cross-platform | Deploy simultaneously on Slack, Telegram, Facebook, or any Hubot adapter |
| multi-language | Cross-interaction among Node.js, Python, Ruby, etc. using Socket.io |
| conversation-classifier | Uses word vectors from spaCy NLP |
| hackable | It extends Hubot. Add your own modules! |
| powerful, easy to use | Check out Setup and Features |

To see what these mean, suppose you have a todo-list feature for AIVA, written in Node.js and leveraging NLP and ML from Python. Did you set your todo list earlier from Slack on your desktop? You can access it from Telegram or Facebook on mobile.

Deepdream in AIVA (check out v3.2.1): writing the Deepdream module took only a few hours, and deploying it in AIVA took just minutes. It runs on Facebook and Telegram simultaneously.

We see people spending a lot of time building bots instead of focusing on what they want to do. It still takes a lot of effort just to get a bot up and running. Moreover, the resulting bot is often confined to a single language and a single platform, without AI capabilities.

Why restrict when you can have all of it? Why build multiple bots when you can have one that plugs into all platforms and runs all languages?

AIVA exists to help with that: we do the heavy lifting and build a ready-to-use bot that is general-purpose, multi-language, and cross-platform, with a robust design and tests, to suit your needs.

AIVA gives you powerful bot tools, saves you the time of building from scratch, and lets you focus on what you want to do. Moreover, with AIVA’s multi-adapter (Slack, Telegram, Facebook), you can build once and run everywhere.

Installation

1. Fork this repo so you can pull new releases later.

2. Clone your forked repository:

```shell
git clone https://github.com/YOURUSERNAME/aiva.git
```

Setup

The line below runs bin/setup && bin/copy-config && npm install:

```shell
npm run setup
```

Then edit the files in config/: default.json (development), production.json (production, optional), and db.json (MySQL).

The command installs the dependencies via bin/install && npm install, and prepares the database for AIVA to run on. The dependencies are minimal: nodejs>=6, python3, and mysql.

See bin/install for the full list, and customize it as needed. This runs the same sequence as the CircleCI build in circle.yml.

Docker: we also offer a Docker image, kengz/aiva. It runs the same way, with an extra Docker layer. See Docker installation for more.

Run

```shell
npm start             # runs 'aivadev' in development mode

# Add flags for more modes
npm start --debug     # activate debug logger
npm start production  # runs 'aiva' in production mode
```

This starts AIVA with the default Hubot adapters: Slack, Telegram, and Facebook (each only if activated). See Commands for more, and Adapters for connecting to different chat platforms.

AIVA saying hi, translating, and running a deep neural net on Slack, Telegram, and Facebook.

Check Setup tips for help.

Features

This gives a top-level overview of the features. See Modules for functions that developers can use.

| Feature | Implemented? |
|---|---|
| fast setup | yes |
| docker | yes |
| cross-platform | yes |
| customMessage | pending |
| graph brain | pending |
| multi-language | yes |
| conversation classifier | yes |
| AI tools | yes |
| theoretical power | yes |

fast setup

3 steps to get a bot installed and running. It once took me just 15 seconds to deploy a bot for an unexpected demo.

P.S. “Fast” means the fewest steps; dependency installation can still be heavy and long.

Docker

Complex projects tend to be difficult to install and distribute. Docker has become a preferred solution, especially for heavy machine learning projects. Docker also provides a fast and safe development environment through containerization.

```shell
docker pull kengz/aiva
```

Understandably, AIVA is quite heavy on dependencies, so since v3.2.0 we have provided a Docker image, kengz/aiva, which makes development seamless. See Docker installation.

cross-platform

The Facebook bot paradigm is “why use multiple apps when you can access them from one bot?”. We dare to ask “why talk to the bot from one platform instead of everywhere?” With AIVA we can build once, run everywhere.

Omnipresence

This inspires the omnipresence feature: a bot that recognizes who you are and can continue a conversation on any supported platform. We talk to our friends everywhere, and they don’t forget us when we switch apps. Bots should do the same.

In short: one brain, multiple interfaces. AIVA’s Hubot base allows for generality, and it can tap into multiple platforms through different adapters. Unlike the original Hubot, however, she can exist on multiple platforms simultaneously by running several interface instances, each plugged into its respective adapter, all sharing the same brain, which serves as the central memory.
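As an illustration of “one brain, multiple interfaces”, here is a minimal Python sketch. It is not AIVA's implementation: Brain and BotInstance are hypothetical names, a plain dict stands in for the graph brain, and the same user ID is assumed on every platform (a real omnipresent bot would have to map each platform's user IDs to one identity).

```python
class Brain:
    """Central memory shared by every adapter instance (a dict stands in for the graph)."""

    def __init__(self):
        self._data = {}

    def get(self, user, key, default=None):
        return self._data.get((user, key), default)

    def set(self, user, key, value):
        self._data[(user, key)] = value


class BotInstance:
    """One interface instance plugged into a single chat adapter."""

    def __init__(self, adapter_name, brain):
        self.adapter_name = adapter_name
        self.brain = brain  # a shared reference, not a copy

    def add_todo(self, user, item):
        todos = self.brain.get(user, 'todos', [])
        self.brain.set(user, 'todos', todos + [item])

    def list_todos(self, user):
        return self.brain.get(user, 'todos', [])


brain = Brain()
bots = {name: BotInstance(name, brain) for name in ('slack', 'telegram', 'fb')}

bots['slack'].add_todo('alice', 'buy milk')  # set from Slack...
print(bots['telegram'].list_todos('alice'))  # ...read from Telegram: ['buy milk']
```

Because every instance holds a reference to the same Brain rather than a copy, a todo added from Slack is immediately visible from Telegram or Facebook.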

See adapters for more.

customMessage

Sending plain text to different adapters is straightforward. However, sometimes we wish to take advantage of a platform’s custom formatting, such as Slack attachments or Facebook rich messages.

These special messages can be sent via robot.adapter.customMessage(attachments), a method that most adapters implement (the Slack, Telegram, and Facebook adapters all have it). All we need to do is detect the current adapter using robot.adapterName, then format the attachments accordingly with customFormat.
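The dispatch on robot.adapterName can be sketched as a lookup table of formatters. This Python version only mirrors the idea (AIVA's interface does this in js): custom_format and the per-platform formatters are hypothetical, and the payloads are simplified shapes loosely following Slack attachments, Telegram's sendMessage parameters, and Facebook's Send API, not complete requests.

```python
def format_slack(text, title):
    # Slack message attachments carry fields such as "title" and "text"
    return {'attachments': [{'title': title, 'text': text}]}


def format_telegram(text, title):
    # Telegram's sendMessage takes "text" plus an optional "parse_mode"
    return {'text': f'*{title}*\n{text}', 'parse_mode': 'Markdown'}


def format_fb(text, title):
    # Facebook's Send API wraps content in a "message" object (recipient omitted)
    return {'message': {'text': f'{title}\n{text}'}}


FORMATTERS = {'slack': format_slack, 'telegram': format_telegram, 'fb': format_fb}


def custom_format(adapter_name, text, title):
    """Pick a payload shape by adapter name, falling back to plain text."""
    formatter = FORMATTERS.get(adapter_name)
    if formatter is None:
        return {'text': text}
    return formatter(text, title)


print(custom_format('slack', 'buy milk', 'Todo'))
# {'attachments': [{'title': 'Todo', 'text': 'buy milk'}]}
```

Keeping the formatters in one table means adding a platform is a single new entry, and unknown adapters still receive a plain-text message.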

See adapters for more.

graph brain

The brain of AIVA is a graph database. It is the central memory that coordinates with the different adapters, keeping AIVA consistent across platforms; for example, it can remember who you are or your todo list, even as you switch apps.

A graph is a very generic and natural way of encoding information, especially information that doesn’t always follow a fixed schema. Knowledge bases are often implemented as graphs (WordNet, ConceptNet, the Google graph, the Facebook graph, etc.) because generic knowledge is ad hoc and connected. Thus, we think a graph is the natural choice for AIVA.

Although graph and non-graph databases can both be Turing-complete, graphs tend to have lower working complexity in practice, and their data units can be schema-free. Turing completeness ensures that the database can do everything a computer supposedly can; lower complexity makes development easier; and being schema-free allows knowledge creation on the fly.
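To make “schema-free” concrete, here is a toy in-memory property graph in Python. PropertyGraph is a hypothetical class for illustration, not AIVA's graph database: nodes and edges carry arbitrary properties, and new properties or relations can be added at any time without a migration.

```python
class PropertyGraph:
    """Toy schema-free graph: nodes and edges take arbitrary properties."""

    def __init__(self):
        self.nodes = {}  # node id -> dict of free-form properties
        self.edges = []  # (source id, relation, target id, properties)

    def add_node(self, node_id, **props):
        # No schema to declare: new properties are simply merged in.
        self.nodes.setdefault(node_id, {}).update(props)

    def add_edge(self, src, relation, dst, **props):
        self.edges.append((src, relation, dst, props))

    def neighbors(self, node_id, relation=None):
        return [dst for src, rel, dst, _ in self.edges
                if src == node_id and (relation is None or rel == relation)]


g = PropertyGraph()
g.add_node('alice', kind='user', slack_id='U123')
g.add_node('todo1', kind='todo', text='buy milk')
g.add_edge('alice', 'HAS_TODO', 'todo1')

# Later, new knowledge with a new property and a new relation; no migration needed.
g.add_node('alice', telegram_id='456')
g.add_node('bob', kind='user')
g.add_edge('alice', 'KNOWS', 'bob', since=2016)

print(g.neighbors('alice', 'HAS_TODO'))  # ['todo1']
```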

multi-language

Unite we stand. When different languages work together, we can access a much larger set of development tools. We also want developers to be able to use AIVA regardless of their favorite programming language.

See Polyglot Environment and Socket.io clients for how it’s done. We currently support Node.js, Python, and Ruby.

conversation classifier

AIVA has a conversation classifier that uses word vectors from spaCy. It is fairly new and still being refined. See lib/py/convo_classifier.py for the backend logic, and scripts/convo.js for the interface script. Define your conversation classes, with their queries and responses, in data/convo_classes.json.
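One way a word-vector classifier can work is nearest-example matching: average the word vectors of the input, compare them against each class's example queries by cosine similarity, and answer with the best class's response. The Python sketch below assumes this technique, which may differ from lib/py/convo_classifier.py; TOY_VECTORS stands in for spaCy's pretrained vectors, and the CONVO_CLASSES layout is only a guess at data/convo_classes.json.

```python
import math

# Toy 3-d vectors standing in for spaCy's pretrained word vectors.
TOY_VECTORS = {
    'hi': [1.0, 0.0, 0.0], 'hello': [0.9, 0.1, 0.0], 'hey': [0.95, 0.05, 0.0],
    'bye': [0.0, 1.0, 0.0], 'goodbye': [0.1, 0.9, 0.0], 'later': [0.0, 0.8, 0.2],
}

# Hypothetical class data; the real layout of data/convo_classes.json may differ.
CONVO_CLASSES = {
    'greeting': {'queries': ['hi', 'hello there'], 'response': 'Hi!'},
    'farewell': {'queries': ['bye', 'goodbye'], 'response': 'See you!'},
}


def text_vector(text):
    """Average the vectors of known words; None if no word is known."""
    vecs = [TOY_VECTORS[w] for w in text.lower().split() if w in TOY_VECTORS]
    if not vecs:
        return None
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def classify(text):
    """Return (class, response) for the most similar example query."""
    vec = text_vector(text)
    if vec is None:
        return None, None
    best, best_score = None, float('-inf')
    for name, spec in CONVO_CLASSES.items():
        for query in spec['queries']:
            qvec = text_vector(query)
            if qvec is None:
                continue
            score = cosine(vec, qvec)
            if score > best_score:
                best, best_score = name, score
    return best, (CONVO_CLASSES[best]['response'] if best else None)


print(classify('hey'))    # ('greeting', 'Hi!')
print(classify('later'))  # ('farewell', 'See you!')
```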

AI tools

AIVA can be used with a well-rounded set of AI/machine learning tools, each among the most advanced of its type. The recommendations below save you the tedious hunt, installation, and setup.

Thanks to the multi-language feature, we can easily access the machine learning universe of Python. For example, lib/py/ais/ contains a sample Tensorflow training script, dnn_titanic_train.py, and a script that deploys the trained DNN, dnn_titanic_deploy.py. It is exposed through scripts/dnn_titanic.js, which lets you run predictions.

The list of AI modules and their respective language:

| AI/ML | type | lang |
|---|---|---|
| Tensorflow | Neural Nets | Python |
| scikit-learn | Generic ML algorithms | Python |
| SkFlow | Tensorflow + Scikit-learn | Python |
| spaCy | NLP | Python |
| Wit.ai | NLP for developers | Node.js client |
| Indico.io | text+image analysis | (REST API) Node.js client |
| IBM Watson | cognitive computing | Node.js client |
| Google API | multiple | Node.js client |
| ConceptNet | semantic network | (REST API) Node.js interface |
| NodeNatural | NLP | Node.js |
| wordpos | WordNet POS | Node.js |
| date.js | time-parsing | Node.js |

These tools are put close together in a polyglot environment, so you can start combining them in brand new ways. An example is the generic NLP parser for parsing user input into intent and functional arguments.

theoretical power

AIVA is based on HTMI, a theoretical interface that is human-bounded Turing complete, with CGKB as its brain. The theorem establishes that HTMI can be used by a human to solve any problem or perform any function she enumerates that is solvable by a Turing Machine. The complete implementation is still underway.

Why is this important? Because far too often people try to solve problems that are unsolvable, and many bots out there are very restrictive. To let developers solve problems with full power and with ease, we have built a general-purpose tool and made it theoretically complete, so you can focus on solving your problem.

Adapters

AIVA can simultaneously connect to multiple chat platforms and still behave as one entity. This allows you to build once, run everywhere.

It uses Hubot’s adapters, so feel free to add any you wish. AIVA comes with the Slack, Telegram, and Facebook adapters. See the ADAPTERS key in config/default.json to activate them or add more.

For each adapter, we encourage you to create two tokens, one for production and one for development. The credentials go into config/default.json (development) and config/production.json (production).

Adapter webhooks are set automatically with ngrok in src/aiva.js; you don’t even need to provide a webhook URL.

As mentioned, we can use a platform’s custom message formatting, such as Slack attachments or Facebook rich messages. Check the adapter with robot.adapterName to format the message accordingly, then send it with robot.adapter.customMessage. More below.

Slack

hubot-slack comes with AIVA. It uses Slack’s RTM (Real Time Messaging) API. Create your bot and get the token here.

To use customMessage, see the example (pending update), invoked when robot.adapterName == 'slack'. Refer to the Slack attachments for formatting.

Telegram

hubot-telegram comes with AIVA. Create your bot and get the token here.

To use customMessage, see the example, invoked when robot.adapterName == 'telegram'. Refer to Telegram bot API for formatting.

Facebook

hubot-fb comes with AIVA. Create your bot by creating a Facebook App and Page, as detailed here. Set FB_PAGE_ID, FB_APP_ID, FB_APP_SECRET, and FB_PAGE_TOKEN as explained on the adapter’s page, hubot-fb.

To use customMessage, see the examples, invoked when robot.adapterName == 'fb'. Refer to the Send API for formatting.

Development Guide

AIVA is created as an A.I. general-purpose interface for developers. You can implement any feature, use it simultaneously on the major platforms, and code in multiple languages. This solves the problem that many bots out there are too specific, often bound to one chat platform, and can only be developed in one language.

Since it is a generic interface, you can focus on writing your app/module. When you are done, plugging it into AIVA should be far simpler than writing a whole app with a MEAN stack or Rails to serve it.

Production and Development

Per common practice, we distinguish between the production and development versions using the NODE_ENV environment variable, so we generate two sets of keys for two bots: aiva (production) and aivadev (development).
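The environment-based selection can be sketched as follows, assuming layered config like the node config package, where default.json is always loaded and production.json overrides it when NODE_ENV=production. The keys BOTNAME, SLACK, TOKEN and ACTIVATE are hypothetical, and plain dicts stand in for the JSON files.

```python
import os


def deep_merge(base, override):
    """Return a new dict where override's values win, merging nested dicts."""
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = value
    return merged


def load_config(default, production, env=None):
    """Pick the config for NODE_ENV, defaulting to development."""
    env = env or os.environ.get('NODE_ENV', 'development')
    if env == 'production':
        return deep_merge(default, production)
    return default


# Hypothetical contents of config/default.json and config/production.json.
default = {'BOTNAME': 'aivadev',
           'ADAPTERS': {'SLACK': {'TOKEN': 'dev-token', 'ACTIVATE': True}}}
production = {'BOTNAME': 'aiva',
              'ADAPTERS': {'SLACK': {'TOKEN': 'prod-token'}}}

print(load_config(default, production, env='production')['BOTNAME'])  # aiva
```

Merging nested dicts means production.json only has to list what differs, such as the production tokens.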

Commands

All the commands are defined in package.json and can be run with npm:

```shell
npm start             # run development mode 'aivadev'
npm start --debug     # activate debug logger
npm start production  # runs production mode 'aiva'
npm stop              # stop the bots
npm test              # run unit tests
forever list          # see the list of bots running
```

Docker

All commands and scripts work with or without Docker. The Docker image syncs the repo volume, so you can edit the source code and run the terminal commands as usual. The shell enters a Docker container and runs the same thing it would on a local machine, so you barely feel the difference when developing.

With Docker, there are two containers, aiva-production and aiva-development, which provide good isolation. You can develop safely in parallel without taking down your deployed version.

See Dockerization for how AIVA is packaged into Docker.

Everything runs pretty much the same with Docker, except for a layer of wrapped abstractions. There are some extra commands for Docker:

```shell
npm run enter  # enter a parallel bash session in the Docker container
npm run reset  # stop and remove the container
```

Custom Dependencies

On start, the Docker containers automatically install any new dependencies specified in the relevant config files, which are listed in Project Dependencies.

Polyglot Environment

Unite we stand. Each language has its strengths, for example Python for machine learning and Node.js for the web. With built-in Socket.io client logic, AIVA lets you write in multiple coordinating languages.

For now we have /lib/client.{js, py, rb}. Feel free to add Socket.io clients for more languages through pull requests!

For quick multilingual development, you can start the polyglot server at src/start-io.js with:

```shell
# shell: start the polyglot server
npm run server
```

Then import lib/client.js to test a local feature from the js interface. Example: the snippet at the top of scripts/translate.js quickly tests the translate function in Python. Uncomment it and run it.

```js
// js: scripts/translate.js
const client = require('../lib/client.js') // lib/client.js, relative to scripts/
global.gPass = client.gPass

global.gPass({
  input: "hola amigos",
  to: 'nlp.py',
  intent: 'translate'
}).then((reply) => console.log(reply.output)) // hello friends
```

Polyglot Development

The quickest way to get into dev is to look at the examples in lib/<lang>/ and scripts/, which we will go over now.

Development comes down to:

- module: callable low-level functions; lives in /lib/<lang>/<module>.<lang>.
- interface: high-level user interface that calls the module functions; lives in /scripts/<interface>.js.

The module can be written in any language if it has a Socket.io client. The interface is in js, and that’s pretty easy to write.

You write a module in <lang>; how do you call it from the interface? There are three cases, depending on the number of <lang>s (including js for the interface) involved.

Case: 1 <lang>

<lang> = js. If your module is in js, just require it directly in the interface script.

Case: 2 <lang>s

e.g. <lang> = js, py.

1. You write a module lib/py/hello.py

2. Call it from the interface scripts/hello_py.js using the exposed global.gPass function, with the JSON msg

```js
// js: scripts/hello_py.js
const msg = {
  input: 'Hello from user.', // input for module function
  to: 'hello.py',            // the target module
  intent: 'sayHi'            // the module function to call with input
  // add more as needed
}
```

3. Ensure the called module function returns a reply JSON to the interface:

```python
# py: lib/py/hello.py
reply = {
    'output': foo(msg.get('input')),  # output to interface
    'to': msg.get('from'),            # is 'client.js' for interface
    'from': id,                       # 'hello.py'
    'hash': msg.get('hash')           # callback hash for interface
}
```

All the JSON fields above are required. The global.gPass function used by the interface automatically injects an id for the reply and a hash to resolve the interface’s promise.

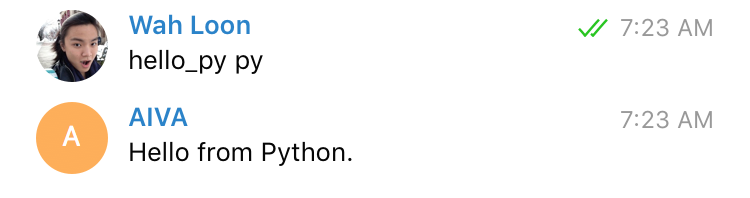

The hello_py feature calling Python, on Telegram.

Case: 3 <lang>s

e.g. <lang> = js, py, rb

1. You write modules in py and rb: lib/py/hello_rb.py and lib/rb/Hello.rb.

2. Call one (py in this example) from the interface scripts/hello_py_rb.js as described earlier.

3. lib/py/hello_rb.py passes it on to the rb module by returning the JSON msg:

```python
# py: lib/py/hello_rb.py
reply = {
    'input': 'Hello from Python from js.',  # input for rb module function
    'to': 'Hello.rb',                       # the target module
    'intent': 'sayHi',                      # the module function to call with input
    'from': msg.get('from'),                # pass on 'client.js'
    'hash': msg.get('hash'),                # pass on callback hash for interface
}
```

4. In lib/rb/Hello.rb, ensure the final module function returns a reply JSON msg to the interface:

```ruby
# rb: lib/rb/Hello.rb
reply = {
  'output' => 'Hello from Ruby.', # output to interface
  'to' => msg['from'],            # 'client.js'
  'from' => @@id,                 # 'Hello.rb'
  'hash' => msg['hash']           # callback hash for interface
}
```

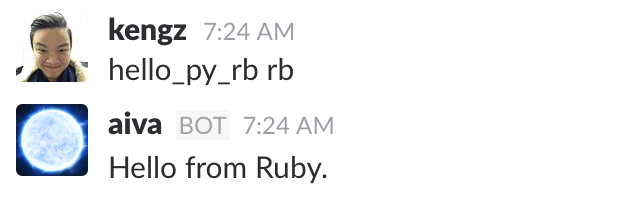

The hello_py_rb feature calling Python, then Ruby, on Slack.

Dev pattern

With this pattern, you can chain multiple function calls that bounce among different <lang>s. Example use case: retrieve data from a Ruby on Rails app, pass it to Java to run algorithms, then to Python for data analysis, then back to the Node.js interface.
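The bounce can be simulated in-process to show how from and hash are carried along the chain. In this Python sketch, plain functions stand in for lib/py/hello_rb.py and lib/rb/Hello.rb, a dict keyed by (to, intent) stands in for the Socket.io server's routing, and the py intent name sayHi is an assumption.

```python
def py_hello_rb(msg):
    """Like lib/py/hello_rb.py: forward a new msg to the Ruby module."""
    return {
        'input': 'Hello from Python from js.',
        'to': 'Hello.rb',
        'intent': 'sayHi',
        'from': msg['from'],  # pass on 'client.js'
        'hash': msg['hash'],  # pass on the interface's callback hash
    }


def rb_hello(msg):
    """Like lib/rb/Hello.rb: build the final reply for the interface."""
    return {
        'output': 'Hello from Ruby.',
        'to': msg['from'],
        'from': 'Hello.rb',
        'hash': msg['hash'],
    }


# Stand-in for the server's routing table: (to, intent) -> module function.
MODULES = {('hello_rb.py', 'sayHi'): py_hello_rb, ('Hello.rb', 'sayHi'): rb_hello}


def route(msg, max_hops=10):
    """Forward msg between modules until it is addressed back to client.js."""
    for _ in range(max_hops):
        if msg['to'] == 'client.js':
            return msg
        msg = MODULES[(msg['to'], msg['intent'])](msg)
    raise RuntimeError('too many hops')


reply = route({'input': 'Hello from user.', 'to': 'hello_rb.py', 'intent': 'sayHi',
               'from': 'client.js', 'hash': 'abc123'})
print(reply['output'])  # Hello from Ruby.
```

The chain ends when a module addresses its reply back to client.js with the original hash intact, which is what lets the interface's promise resolve after any number of hops.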

“Ma look! No hand(ler)s!”

“Do I really have to add a handler that replies with a JSON msg for every function I call?” That would be really cumbersome.

To streamline polyglot development further, we’ve made each client.<lang> automatically try to compile a proper reply JSON msg, using the original msg it received to invoke the function.

This means you can call a module (to) by its name and its function (intent) by its dot-path (if it’s nested), then provide a valid input format (a single argument for now).

In fact, scripts/translate.js does just that. It uses Socket.io to call lib/py/nlp.py, which imports lib/py/ais/ai_lib/translate.py.

To test-run it, start the polyglot server at src/start-io.js with:

```shell
# shell: start the polyglot server
npm run server
```

Then uncomment the snippet at the top of scripts/translate.js and run it.

```js
// js: scripts/translate.js
const client = require('../lib/client.js') // lib/client.js, relative to scripts/
global.gPass = client.gPass

global.gPass({
  input: "hola amigos",
  to: 'nlp.py',
  intent: 'translate'
}).then((reply) => console.log(reply.output)) // hello friends
```

This calls lib/py/nlp.py, which imports lib/py/ais/ai_lib/translate.py; translate returns the translated string instead of a reply JSON, and the client auto-compiles a proper reply JSON msg for you.

```python
# py: lib/py/ais/ai_lib/translate.py
# t is the module's translator object, set up elsewhere in this file (not shown)
def translate(source, to="en"):
    return t.translate(source, from_lang="auto", to_lang=to)
```

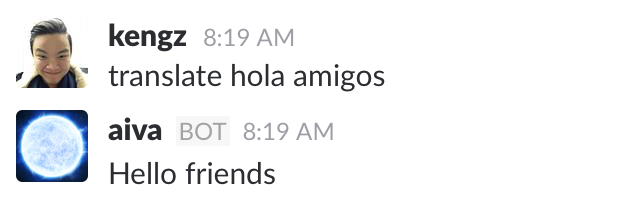

The translate feature calling Python, which returns a string that client.py auto-compiles into a JSON msg, on Slack.

On receiving a msg, the client.<lang> tries to call the function by passing msg. If that throws an exception, it retries by passing msg.input. After the function executes and returns the result, the client.<lang> handler checks whether the reply is a valid JSON msg and, if not, turns it into one via correctJSON(reply, msg), extracting the information needed from the received msg.
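The try-then-retry logic and the reply correction can be sketched in Python as below. handle and correct_json are illustrative stand-ins for the client's handler and correctJSON, the validity check (a dict with to, from and hash) is an assumption, and translate here is a toy lookup rather than the real translator.

```python
def correct_json(reply, msg, module_id):
    """Wrap a bare return value into a reply msg addressed back to the sender."""
    if isinstance(reply, dict) and all(k in reply for k in ('to', 'from', 'hash')):
        return reply  # already a routable msg; pass it through unchanged
    return {
        'output': reply,
        'to': msg.get('from'),
        'from': module_id,
        'hash': msg.get('hash'),
    }


def handle(fn, msg, module_id):
    """Call fn with the whole msg; if that raises, retry with msg['input']."""
    try:
        reply = fn(msg)
    except Exception:
        reply = fn(msg.get('input'))
    return correct_json(reply, msg, module_id)


def translate(source, to='en'):
    """Toy stand-in for lib/py/ais/ai_lib/translate.py (a lookup, not a translator)."""
    return {'hola amigos': 'hello friends'}[source]


msg = {'input': 'hola amigos', 'to': 'nlp', 'intent': 'translate',
       'from': 'client.js', 'hash': 'h1'}
print(handle(translate, msg, 'nlp.py'))
# {'output': 'hello friends', 'to': 'client.js', 'from': 'nlp.py', 'hash': 'h1'}
```

Here the first call fails because a dict is not a valid lookup key, so the handler retries with msg['input'] and then wraps the bare string into a reply.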

Lastly, after the js global.gPass sends out a msg, the final reply directed at it should contain an output field; this is good dev practice and keeps promise resolution reliable. When global.gPass(msg) gets its reply and its promise resolves, you will see hasher.handle invoking cb in stdout.

Unit Tests

This repo includes unit tests only for the js modules and interface scripts; they use Mocha and run with npm test. Tests should cover the system, so tests for AI models are excluded.

For modules in other <lang>s, you may add any unit-testing framework of your choice.

How it works: Socket.io logic and standard

Standard msg JSON keys for different purposes:

- to call a module’s function in <lang>: scripts/hello_py.js

```js
// js: scripts/hello_py.js
const msg = {
  input: 'Hello from user.', // input for module function
  to: 'hello.py',            // the target module
  intent: 'sayHi'            // the module function to call with input
  // add more as needed
}
```

- to reply with the payload to the sender: lib/py/hello.py

```python
# py: lib/py/hello.py
reply = {
    'output': foo(msg.get('input')),  # output to interface
    'to': msg.get('from'),            # is 'client.js' for interface
    'from': id,                       # 'hello.py'
    'hash': msg.get('hash')           # callback hash for interface
}
```

- to pass the payload on to another module’s function: lib/py/hello_rb.py

```python
# py: lib/py/hello_rb.py
reply = {
    'input': 'Hello from Python from js.',  # input for rb module function
    'to': 'Hello.rb',                       # the target module
    'intent': 'sayHi',                      # the module function to call with input
    'from': msg.get('from'),                # pass on 'client.js'
    'hash': msg.get('hash'),                # pass on callback hash for interface
}
```

msg JSON keys

| key | details |
|---|---|
| input | input to the target module function. |
| to | filename of the target module in lib/<lang>. |
| intent | function of the target module to call. Call nested function with dot-path, e.g. nlp.translate. |
| output | output from the target function to reply with. |
| from | ID of the script sending the msg. |
| hash | auto-generated callback-promise hash from client.js to callback interface. |

Of course, add additional keys to the JSON as needed by your function.
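Resolving a dot-path intent such as nlp.translate amounts to following attributes one name at a time. Here is a minimal Python sketch, where resolve_intent is a hypothetical helper and types.SimpleNamespace stands in for an imported module:

```python
from functools import reduce
from types import SimpleNamespace


def resolve_intent(module, intent):
    """Follow each dot-separated name in turn: 'nlp.translate' -> module.nlp.translate."""
    return reduce(getattr, intent.split('.'), module)


# Stand-in for an imported module with a top-level and a nested function.
fake_module = SimpleNamespace(
    sayHi=lambda text: 'Hi!',
    nlp=SimpleNamespace(translate=lambda text: f'translated: {text}'),
)

fn = resolve_intent(fake_module, 'nlp.translate')
print(fn('hola amigos'))  # translated: hola amigos
```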

Server

There is a socket.io server that extends Hubot’s Express.js server: lib/io_server.js. All msgs go through it. For example, let msg.to = 'hello.py', msg.intent = 'sayHi'. The server splits this into module = 'hello', lang = 'py', modifies msg.to = module, then sends the msg to the client of lang.
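The split-and-forward step can be sketched in Python as follows. split_target and route_to_client are hypothetical names (the real logic is in lib/io_server.js), and appending to a per-language list stands in for emitting on that client's socket.

```python
import os.path


def split_target(to):
    """Split 'hello.py' into ('hello', 'py')."""
    module, ext = os.path.splitext(to)
    return module, ext.lstrip('.')


def route_to_client(msg, clients):
    """Set msg['to'] to the bare module name and queue it for that language's client."""
    module, lang = split_target(msg['to'])
    routed = {**msg, 'to': module}  # copy, so the caller's msg is left unchanged
    clients[lang].append(routed)    # stand-in for emitting on that client's socket
    return lang, routed


clients = {'js': [], 'py': [], 'rb': []}
print(route_to_client({'input': 'Hi', 'to': 'hello.py', 'intent': 'sayHi'}, clients))
# ('py', {'input': 'Hi', 'to': 'hello', 'intent': 'sayHi'})
```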


Clients

For each language, there is a socket.io client that imports all modules of its language within lib. When the server sends it a msg, the client’s handler finds the module and its function using msg.to and msg.intent respectively, then calls the function with msg as the argument. If it gets a valid reply msg, it passes it on to the server.


Note: because a module is called using msg.to and msg.intent, you must ensure that the functions are named properly. Also, Ruby’s requirement that module names be capitalized means you have to name the file with the same capitalization, e.g. lib/rb/Hello.rb for the Hello module.

We currently support Node.js, Python, and Ruby.

Entry point

The entry point is always a js interface script, but luckily we have made it trivial for non-js developers to write one. A full reference is the Hubot scripting guide.

robot.respond takes a regex and a callback function, which executes when the regex matches the string the robot receives. res.send is the primary method we use to send a string to the user.

Overall, there are 2 ways to connect with lib modules:

- global.gPass (e.g. scripts/hello_py.js): a global method to pass a msg. It generates a hash using lib/hasher.js with a Promise, which is resolved whenever the js client receives a valid reply msg with the same hash. This method returns a Promise that resolves with that msg, for chaining.
- wrapped global.gPass (e.g. scripts/translate.js, lib/js/nlp.js): similar to the above, but the msg is properly generated by a js lib module, resulting in a much cleaner and safer interface script. The lib module needs to be imported at the top to be used.
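The hash-and-resolve mechanism behind global.gPass can be sketched with asyncio Futures in place of JS Promises. Hasher, g_pass and fake_send are illustrative names rather than lib/hasher.js's API, and fake_send simulates a module that replies with the same hash it received.

```python
import asyncio
import uuid


class Hasher:
    """Tracks pending requests by hash (hypothetical stand-in for lib/hasher.js)."""

    def __init__(self):
        self._pending = {}  # hash -> Future awaiting the reply msg

    def gen(self):
        """Create a unique hash and a Future that resolves with the matching reply."""
        h = uuid.uuid4().hex
        future = asyncio.get_running_loop().create_future()
        self._pending[h] = future
        return h, future

    def handle(self, reply):
        """Resolve the Future whose hash matches the reply; ignore unknown hashes."""
        future = self._pending.pop(reply.get('hash'), None)
        if future is not None and not future.done():
            future.set_result(reply)


async def g_pass(hasher, msg, send):
    """Inject 'from' and 'hash', send msg, then wait for the matching reply."""
    h, future = hasher.gen()
    send({**msg, 'from': 'client.js', 'hash': h})
    return await future


async def main():
    hasher = Hasher()
    loop = asyncio.get_running_loop()

    def fake_send(msg):
        # Pretend a module replies later, echoing the hash it received.
        reply = {'output': 'Hi!', 'to': msg['from'],
                 'from': 'hello.py', 'hash': msg['hash']}
        loop.call_soon(hasher.handle, reply)

    reply = await g_pass(
        hasher, {'input': 'Hello', 'to': 'hello.py', 'intent': 'sayHi'}, fake_send)
    return reply['output']


print(asyncio.run(main()))  # Hi!
```

Keying pending requests by hash is what allows many gPass calls to be in flight at once: each reply resolves only the request that carries its hash.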

Data flow

The msg goes through socket.io as:

```
js(interface script)
-> js(io_server.js)
-> <lang>(client.<lang>)
-> js(io_server.js)
-> ... (can bounce among different <lang> modules)
-> js(client.js)
-> js(interface script)
```

For the hello_py.js example, the path is:

```
js(scripts/hello_py.js): user input
-> js(lib/io_server.js)
-> py(client.py): call py function
-> js(io_server.js)
-> js(client.js): call Promise.resolve
-> js(interface script): send back to user
```

Project directory structure

What goes where:

| Folder/File | Purpose |
|---|---|
| bin/ | Binary executables |
| config/ | Credentials |
| db/ | Database migration files, models |
| lib/<lang>/ | Module scripts, grouped by language, callable via socket.io. See Polyglot Development. |
| src/ | Core bot logic |
| logs/ | Logs from the bot, for debugging and health checks. |
| scripts/ | The Node.js user interface for the lib/ modules. |
| scripts/_init.js | Kicks off AIVA setup after the base Hubot is constructed, before other scripts are loaded. |
| test/ | Unit tests; uses Mocha. |
| external-scripts.json | You can load Hubot npm modules by specifying them here and in package.json. |

Setup tips

Docker installation

Docker is a nice way to package and distribute complex modules; it also lets you develop in a safe, isolated environment through containerization.

The AIVA Docker image (1GB) comes ready to run the repo source code, just as if run locally.

Ubuntu

- Digital Ocean: The easiest, using their Docker 1-click app on Ubuntu. Docker comes installed with it.

- Ubuntu from scratch: See this guide by Digital Ocean.

Then you need Node.js to run the basic setup for entering Docker:

```shell
# Node.js
curl -sL https://deb.nodesource.com/setup_6.x | sudo -E bash -
sudo apt-get install -y nodejs
```

If your Digital Ocean instance has insufficient swap memory, boost it:

```shell
# Ensure you have enough swap memory; this sets up 1GB of swap space.
sudo swapoff -a
sudo dd if=/dev/zero of=/swapfile bs=1024 count=1024k
sudo chmod 600 /swapfile  # restrict access, as mkswap recommends
sudo mkswap /swapfile
sudo swapon /swapfile
swapon -s
```

Mac OSX

On a Mac, Docker needs a VM driver underneath. Here’s the complete Docker installation, including Node.js:

```shell
# Install Homebrew
ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)"

# Install Cask
brew install caskroom/cask/brew-cask

# Install Docker Toolbox
brew cask install dockertoolbox

# Create the docker machine. Note that 'default' is the VM name we will be using
docker-machine create --driver virtualbox default

# Allocate resources for the docker machine; VirtualBox requires it to be stopped first
docker-machine stop default
VBoxManage modifyvm default --cpus 2 --memory 4096
docker-machine start default

# Use the VM from the terminal
echo 'eval "$(docker-machine env default)"' >> ~/.bash_profile
source ~/.bash_profile

# Node.js
brew install node
npm update npm -g
```

Custom deployment

All bot deployment commands are wrapped with npm inside package.json, so even novice users can customize them there; for example, change “aiva” and “aivadev” to the bot name of your choice.

Monitoring

AIVA uses supervisord with Docker, and forever without Docker. Either way, the bot logs are written to logs/.

Additionally, the non-bot logs are written to /var/log/ for supervisor, nginx and neo4j in Docker.

Dockerization

In a spirit of try-hard devops, we package the Docker image so it follows common deployment practices. Below are the primary processes run in the Docker container, and their config files. Together they package the AIVA that runs on a local machine into Docker.

- supervisord, with bin/supervisord.conf: the main entry-point process that runs everything else. It logs output to the main bash session started by npm start.
- nginx, with bin/nginx.conf: helps expose the Docker port to the host machine and the outside world. See Docker Port-forwarding for how it’s done.

Helper bash scripts for running Commands:

- bin/start.sh, run by npm start: start a container in the production or development environment, reserving the primary bash session for supervisord.
- bin/enter.sh, run by npm run enter: start a parallel, non-primary bash session to enter the container.
- bin/stop.sh, run by npm stop: stop a container and the bot inside.
- bin/reset.sh, run by npm run reset: stop and remove a container, so you can create a fresh one with npm start if things go wrong.

Docker Port-forwarding

In the uncommon case where you need to expose a port from Docker, there are 4 steps:

- EXPOSE the port and its surrogate in bin/Dockerfile, then rebuild the Docker image for the changes to apply.
- Define the reverse proxy for the port following the pattern in bin/nginx.conf.
- For Mac OS X, add the ports for host-container port forwarding by VirtualBox in bin/start.sh, above VBoxManage controlvm ...
- Publish the Docker port in bin/start.sh at docker run ... -p <hostport>:<containerport>

Webhook using ngrok

You don’t need to specify any webhook urls; they are set up automatically in index.js with ngrok. Access the ngrok interface at http://localhost:4040 (production) or http://localhost:4041 (development) after AIVA is run.

Note that for each adapter that needs a webhook, you need to specify the PORT and the environment key for the webhook in config/.

If the webhook environment key is not specified, ngrok assigns a random ephemeral web URL. Specifying the key is useful if you want a Heroku URL or a custom ngrok URL.

For example, since Facebook takes 10 minutes to update a webhook, we want a persistent webhook URL for it, while Telegram is left on a random URL generated by ngrok.

```
# FB_WEBHOOK is set as https://aivabot.ngrok.com
# TELEGRAM_WEBHOOK is not set
# ... AIVA is run, Telegram is given a random url

# stdout log
[Wed Jun 01 2016 12:02:33 GMT+0000 (UTC)] INFO telegram webhook url: https://ddba2b46.ngrok.io at PORT: 8443
[Wed Jun 01 2016 12:02:33 GMT+0000 (UTC)] INFO Deploy bot: AIVAthebot with adapter: telegram
[Wed Jun 01 2016 12:02:33 GMT+0000 (UTC)] INFO fb webhook url: https://aivadev.ngrok.io at PORT: 8545
[Wed Jun 01 2016 12:02:33 GMT+0000 (UTC)] INFO Deploy bot: aivadev with adapter: fb
```

SSH Browser-forwarding

If you’re hosting Neo4j on a remote machine and want to access its browser GUI on your local machine, connect to it via

```shell
ssh -L 8080:localhost:7474 <remote_host>
```

Then you can go to http://localhost:8080/ on your local browser.

Human-Turing Machine Interface

We present Human-Turing Machine Interface (HTMI) that is human-bounded Turing complete.

Design

HTMI consists of a human, a Turing Machine, and an interface. The human sends queries and responses to the interface, which maps and canonicalizes them as inputs to the TM. Symmetrically, the TM sends queries and responses to the interface, which verbalizes them as output to the human.

The design outlines of HTMI are as follows:

- approximate human-human interaction

- implement Human-Centered Design (HCD): discoverability (no manual), with affordances, signifiers, mapping

- constraints and forcing functions (HCD)

- feedback (HCD)

- just as in human-human interaction we don’t see “human errors” but simply ask for clarification, the machine should do the same. It should treat a “human error” as an approximation, ask for clarification, and try to complete the action (HCD). Call this “error-resilience”, and the machine’s reaction “inquiry”.

- long short-term memory (LSTM) on both the human and the TM

- TM must approximate human behavior and human-like brain function, using Contextual Graph Knowledge Base (CGKB)

Theorem

HTMI is human-bounded Turing complete.

Definition

Human-bounded Turing complete: the class that is the intersection of the Turing-complete class and the class of problems enumerable by humans. The latter class bounds its Turing completeness. Note that it may be the Turing-complete class itself, if the latter class contains the former.
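The definition can be written compactly. With notation introduced here only for illustration, let $\mathcal{T}$ be the Turing-complete class, $\mathcal{H}$ the class of problems enumerable by humans, and $\mathcal{B}$ the human-bounded Turing-complete class:

$$
\mathcal{B} = \mathcal{T} \cap \mathcal{H}, \qquad \mathcal{T} \subseteq \mathcal{H} \implies \mathcal{B} = \mathcal{T}.
$$

The second relation is the note above: when the human-enumerable class contains the Turing-complete class, the bound does not restrict anything.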

Proof

- Let an HTMI be given. A TM is Turing complete. For practical interpretation, a TM is equivalent to a pair {Fn, I}, where Fn is the set of functions invokable by the TM with random access to its information I on its tape.

- The interface takes a human input and maps it surjectively into Fn. If the input cannot be mapped, the interface rejects it.

- For the mapped fn ∈ Fn, the TM computes using fn and I.

- When the TM halts, the interface passes its output to the human, optionally verbalized.

- The map above is surjective, mapping from the class of problems enumerable by humans into the TM class.

- Since only fn ∈ Fn are mapped into surjectively and computed, the HTMI class is a subset of the TM class. Since it maps from the class of problems enumerable by humans, the HTMI class is at the intersection of the two classes. Therefore HTMI is human-bounded Turing complete.

Implication

The theorem establishes that a human can use HTMI to solve any problem or perform any function she enumerates that is solvable by a TM. For practical purposes, we focus on decidable problems.

Contextual Graph Knowledge Base

We present the Contextual Graph Knowledge Base (CGKB) as the TM memory implementation of HTMI. It shall be consistent with the design outlines of HTMI.

Design

Recall that TM is equivalent to {Fn, I}, where Fn is the set of functions invokable by a TM with random access of its information I on its tape. CGKB serves as the implementation for I. To also satisfy the approximation of human-human interaction, we have the following design outlines:

graph: like any enumerable data type, a graph supports TM completeness. Moreover, its generality and properties are well established mathematically, and it is easier to use given the connected nature of knowledge.

Fn includes generic TM functions and graph operations on I, which is information encoded in graph nodes and edges that richly represent properties and relationships.

context: the collective term for fundamental information types. To make TM operations tractable, contextualization restricts the scope to a manageable subgraph. The context filters are: privacy (public/private knowledge), entity, ranking, time, constraints, graph properties.

ranking: graph indexing analogous to how humans rank memories, for tractability. Ranking can use emotions (by human analogy) and LSTM (long short-term memory) for quick graph search.

learning: consistent with the “inquiry” feature of HTMI, CGKB will extend itself by inquiring for missing information.

Terminology

- auto-planning: the brain can build and provide plans as causal graphs automatically, as in the simple case of getting a friend’s phone number, or the more complex case of playing chess. This uses all the features of the brain enumerated above.

- canonicalized input <fn, i_p>: the output of the HTMI interface on taking human input, for the TM to use, where fn is a TM function and i_p the partial information needed for fn’s functional arguments. CGKB will attempt to extract the full information i required for fn.

- causality: the directed structure of the graph. Used in search, planning, dependencies, chronology, etc.

- cognition: graph operations as Fn on I, to mimic human cognition.

- constraint: e.g. existence, soundness.

- function execution fn(i): execute TM function fn ∈ Fn on information i ∈ I to yield output.

- graph: the entire CGKB graph.

- norm: preferred defaults to resolve ambiguities.

- plan: the causal graph of the necessary information in I to execute a function in Fn.

- plan execution: extraction of information by traversing the plan in reverse causal order, from leaves to root, using the supplied i_p and contexts. Returns a subgraph for the extraction of i.
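Traversing a plan in reverse causal order, from leaves to root, amounts to a post-order depth-first traversal of its requires edges. A minimal sketch, assuming the plan is stored as a hypothetical dict from each node to the nodes it requires:

```python
from typing import Dict, List

def execution_order(plan: Dict[str, List[str]], root: str) -> List[str]:
    """Return plan nodes from leaves to root (post-order DFS over 'requires' edges)."""
    order: List[str] = []
    seen = set()

    def visit(node: str) -> None:
        if node in seen:
            return  # already visited: handles shared or cyclic requirements
        seen.add(node)
        for dep in plan.get(node, []):
            visit(dep)
        order.append(node)  # a node is emitted only after everything it requires

    visit(root)
    return order

plan = {"call": ["phone_number"], "phone_number": ["person"]}
print(execution_order(plan, "call"))  # ['person', 'phone_number', 'call']
```

Each node in the returned order can then be resolved from i_p and the context, since everything it depends on has already been resolved.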

Formal Theory

Definitions

- g: the entire graph of CGKB.

- g_i, h: placeholders for any subgraph of g.

- Contextualize: the Contextualize algorithm.

- c_0: the initial context, determined from fn, i_p.

- <fn, i_p>: the canonicalized input from the interface of HTMI after parsing a human input, where fn is a TM function, and i_p the partial information needed for fn’s execution.

- filter: fields used to filter context, i.e. constrain the expansion of the initial context in the Contextualize algorithm; thus far they are {privacy, entity, ranking, time, constraints, graph properties}.

- scope: the lists of fulfilled and unfulfilled information, scope_f, i_u respectively, for the extraction of i for fn(i).

- c: contextual (knowledge) graph, or the contextualized subgraph, i.e. the output Contextualize(g, c_0, i_p); c ⊂ g.

- i ∈ I: the complete information needed to compute fn(i). It is encoded within g; there exists a smallest subgraph h ⊂ g that sufficiently encodes i. Equivalently, i is the union of partial information i_1, i_2, ...

- k: knowledge, i.e. the complete information extractable from g. Note i ⊂ k, but fn(i) = fn(k) since the TM function fn only computes using the needed information.

- k_h: the information extractable from subgraph h ⊂ g. Note k = k_g.

- Ex: the extraction operator to extract knowledge from a graph, e.g. k = Ex(g), k_h = Ex(h).

- -*->: graph path, or ‘derives’. We say g_1 -*-> g_2 if g_1 is connected to g_2, and k_1 -*-> k_2 if k_1 derives k_2.

Axioms

- Knowledge is encoded in a graph g, and decoded using the Ex operator.

- Knowledge is derivable, and this is reflected in its graph encoding by connectedness. Let k_1 = Ex(g_1), k_2 = Ex(g_2). If k_1 derives k_2, i.e. k_1 -*-> k_2, then there must exist a corresponding path g_1 -*-> g_2, s.t. (k_1 ∪ k_2) is extractable from the connected component CC of g_1, i.e. (k_1 ∪ k_2) ⊂ Ex(CC(g_1)).

- Base knowledge (graph sink) is the most basic knowledge, and is the source of the derivation path. If the path is cyclic, arbitrarily choose the last-encountered node as the base knowledge. Base knowledge resolves all knowledge along the derivation path by gradual substitutions.
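A toy check of the derivability axiom for one pair of nodes. For illustration only, it assumes each subgraph g_i is a single node, edges are stored in a hypothetical adjacency dict, and Ex is simply the union of the facts stored on the given nodes:

```python
from collections import deque
from typing import Dict, Iterable, Set

Graph = Dict[str, Set[str]]  # hypothetical: node -> successors (directed edges)

def has_path(g: Graph, src: str, dst: str) -> bool:
    """g_1 -*-> g_2: is there a directed path from src to dst?"""
    seen, queue = {src}, deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return True
        for nxt in g.get(node, set()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False

def connected_component(g: Graph, starts: Iterable[str]) -> Set[str]:
    """CC(g_1): every node reachable from `starts` when edge direction is ignored."""
    undirected: Graph = {}
    for node, succs in g.items():
        undirected.setdefault(node, set()).update(succs)
        for s in succs:
            undirected.setdefault(s, set()).add(node)
    seen = set(starts)
    queue = deque(seen)
    while queue:
        node = queue.popleft()
        for nxt in undirected.get(node, set()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

def ex(facts: Dict[str, Set[str]], nodes: Iterable[str]) -> Set[str]:
    """Toy Ex operator: the union of the facts stored on the given nodes."""
    out: Set[str] = set()
    for node in nodes:
        out |= facts.get(node, set())
    return out

g = {"call": {"phone_number"}, "phone_number": {"person"}, "weather": set()}
facts = {"call": {"calling needs a number"}, "person": {"John: (234)-567-8900"}}
k_1, k_2 = ex(facts, ["call"]), ex(facts, ["person"])
# Axiom: if k_1 -*-> k_2 then g_1 -*-> g_2 and (k_1 ∪ k_2) ⊂ Ex(CC(g_1)).
print(has_path(g, "call", "person"), (k_1 | k_2) <= ex(facts, connected_component(g, ["call"])))
# True True
```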

Contextualize algorithm

Let there be given graph g, initial context c_0, partial information i_p.

input: g, c_0, i_p

output: contextual knowledge graph c

enumerate:

- Initialize scope from c_0, i_p.

- While scope is not completely fulfilled, do:
  - BFS expansion on c_0 using the unfulfilled scope, filters and i_p, and
    - if context is expanded, update scope, continue;
    - else, apply learning with inquire to expand g (but not context and scope); then retry on success or break on failure.

- Return the context c, along with the fulfilled scope for direct access of i.
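The loop above can be sketched in Python under strong simplifying assumptions; this is an illustration, not the CGKB implementation. The graph is a hypothetical pair of dicts (node properties and neighbour sets), the scope is a set of property names to fulfill, a single `keep` predicate stands in for all the filters, and a hypothetical `inquire` callback stands in for learning. It returns True if it managed to extend g.

```python
from collections import deque
from typing import Any, Callable, Dict, Optional, Set, Tuple

Nodes = Dict[str, Dict[str, Any]]  # hypothetical: node id -> properties it encodes
Edges = Dict[str, Set[str]]        # hypothetical: node id -> neighbour ids

def contextualize(
    nodes: Nodes,
    edges: Edges,
    c_0: Set[str],
    scope: Set[str],
    keep: Callable[[Dict[str, Any]], bool] = lambda props: True,
    inquire: Optional[Callable[[Nodes, Edges, Set[str]], bool]] = None,
    max_inquiries: int = 3,
) -> Tuple[Set[str], Dict[str, Any]]:
    """Return the context c and the fulfilled scope (property name -> value)."""
    context = set(c_0)
    fulfilled: Dict[str, Any] = {}

    def absorb(node: str) -> None:
        # Fulfill any still-open scope entry that this node provides.
        props = nodes.get(node, {})
        for key in scope.difference(fulfilled):
            if key in props:
                fulfilled[key] = props[key]

    for node in context:
        absorb(node)
    frontier = deque(context)
    inquiries = 0
    while scope.difference(fulfilled):
        if frontier:
            # BFS step: expand the context by one node's filtered neighbours.
            node = frontier.popleft()
            for nbr in edges.get(node, set()):
                if nbr not in context and keep(nodes.get(nbr, {})):
                    context.add(nbr)
                    absorb(nbr)
                    frontier.append(nbr)
            continue
        # The context cannot expand further: learning may extend g (not c or scope).
        if inquire is None or inquiries >= max_inquiries:
            break
        inquiries += 1
        if not inquire(nodes, edges, scope.difference(fulfilled)):
            break
        frontier = deque(context)  # retry the BFS over the extended graph
    return context, fulfilled

nodes = {
    "me": {"name": "me"},
    "John": {"name": "John", "phone": "(234)-567-8900"},
    "diary": {"private": True},
}
edges = {"me": {"John", "diary"}, "John": {"me"}, "diary": {"me"}}
c, scope_f = contextualize(nodes, edges, {"me"}, {"phone"},
                           keep=lambda p: not p.get("private", False))
print(sorted(c), scope_f)  # ['John', 'me'] {'phone': '(234)-567-8900'}
```

The private node is filtered out of the context, and the loop stops as soon as the scope is fulfilled. The `max_inquiries` cap is an added safeguard so that a learning step which keeps "succeeding" without adding useful knowledge cannot loop forever.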

We also call the resulting context c the contextual knowledge graph, and the extractable knowledge k_c = Ex(c) the contextual knowledge, obtained by using g, c_0, i_p, filters. Note also fn(i) = fn(k_c), thus the resulting contextual knowledge is sufficient for TM computation.

We prove below that this algorithm yields knowledge that is g-bounded complete; the proof also shows that the algorithm is correct.

g-bounded Completeness Theorem

Facts

- The initial context c_0 can be disjoint (multiple graph components).

- Thus the resulting context c can also be a graph with disjoint components, each containing at least one node from c_0.

- The resulting context c is determined by the provided g, c_0, i_p, and filters.

Definition

Let there be given canonicalized input <fn, i_p> with TM function fn, partial information i_p, graph g, initial context c_0, and let context c = Contextualize(g, c_0, i_p), and its extracted knowledge k_c = Ex(c). Let k_g = Ex(g) be the complete knowledge extractable from g.

We say k_c is g-bounded complete iff fn(k_c) = fn(k_g).

Lemma

k_g is g-bounded complete.

Proof: fn(k_g) = fn(k_g) by identity. □

Theorem

k_c is g-bounded complete.

Proof: The initial context c_0 is obtained from <fn, i_p>. The scope of the Contextualize algorithm is initialized with all the necessary information needed for the resulting context and fn.

When the algorithm terminates with all the scopes fulfilled, by the axioms, we obtain in c all the necessary basic knowledge to resolve c_0 entirely, thus yielding the necessary and sufficient i for fn(i). The algorithm is correct.

The context c is thus the smallest subgraph in g that encodes the complete information i needed to compute fn(i) = fn(k_c), thus extending the context any further, even to g, does not add to the already-complete i, since we know fn(k_g) = fn(i). Thus, combining the two equalities, we get fn(k_c) = fn(k_g). □

CGKB algorithm

Let there be given the graph g of CGKB and the canonicalized input <fn, i_p> from human input parsed by the interface of HTMI, where fn is a TM function and i_p the partial information for executing fn.

input: g, fn, i_p

output: TM output utilizing contextual knowledge fn(i)

enumerate:

- Auto-planning: set plan = Contextualize(g, fn, i_p).

- Contextual knowledge extraction: set c = Contextualize(g, plan, i_p).

- Extract knowledge k_c = Ex(c) from c (and its scope), compute and return fn(k_c) = fn(i).

fn is used as the initial context for extracting a plan. The plan is used for TM to automatically extract the contextual knowledge needed for computation. This is similar to AI planning, except the plan is already encoded in g when the CGKB learns, thus the planning is automatic.

The context c and its scope are extracted from the plan (or CGKB learns from the human otherwise). We then extract the contextual knowledge, k_c, containing information i ⊂ k_c, for computing and returning fn(k_c) = fn(i).
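The three steps can be put together in a minimal end-to-end sketch on the “Call John” example from the Examples section. The toy graph layout (separate `requires`, `knows`, and `props` maps) and all function names are hypothetical. Auto-planning follows `requires` edges from the fn node, and the two later steps are collapsed into one lookup that finds the entity named by i_p among the people `me` knows, keeping only the properties the plan needs.

```python
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical toy CGKB: 'requires' edges encode plans, 'knows' edges link
# entities, and 'props' holds the information stored on each node.
G: Dict[str, Any] = {
    "requires": {"call": ["phone_number"], "phone_number": ["person"]},
    "knows": {"me": ["John", "Jane"]},
    "props": {
        "John": {"name": "John", "phone_number": "(234)-567-8900"},
        "Jane": {"name": "Jane", "phone_number": "(345)-678-9012"},
    },
}

def auto_plan(g: Dict[str, Any], fn_name: str) -> List[Tuple[str, str]]:
    """plan = Contextualize(g, fn, i_p): collect 'requires' edges reachable from fn."""
    plan: List[Tuple[str, str]] = []
    frontier, seen = [fn_name], {fn_name}
    while frontier:
        node = frontier.pop()
        for dep in g["requires"].get(node, []):
            plan.append((node, dep))
            if dep not in seen:
                seen.add(dep)
                frontier.append(dep)
    return plan

def extract_knowledge(g: Dict[str, Any], plan: List[Tuple[str, str]], i_p: str) -> Dict[str, Any]:
    """c = Contextualize(g, plan, i_p) followed by k_c = Ex(c), collapsed into one lookup."""
    needed = {dep for _, dep in plan}
    for entity in g["knows"].get("me", []):
        props = g["props"].get(entity, {})
        if props.get("name") == i_p:
            return {k: v for k, v in props.items() if k == "name" or k in needed}
    raise LookupError(f"no known entity named {i_p!r}; CGKB would inquire here")

def cgkb(g: Dict[str, Any], fn: Callable[[Dict[str, Any]], Any], fn_name: str, i_p: str) -> Any:
    plan = auto_plan(g, fn_name)
    k_c = extract_knowledge(g, plan, i_p)
    return fn(k_c)  # fn(k_c) = fn(i)

def call(k: Dict[str, Any]) -> str:
    # Stand-in for the real side effect of placing a call.
    return f"dialing {k['phone_number']}"

print(cgkb(G, call, "call", "John"))  # dialing (234)-567-8900
```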

Proof: To prove that the algorithm is correct, we must show that it is Human-bounded Turing complete, as outlined in HTMI. If so, CGKB can be used by HTMI.

Recall again that TM is equivalent to {Fn, I}. We know that knowledge k or information i ⊂ k is encoded in the graph g of CGKB. For any fn ∈ Fn, we can obtain a sufficient context c such that i ⊂ k_c = Ex(c) for computation fn(i). We show below.

The graph g of CGKB supports TM completeness, and thus without human restrictions, CGKB with TM is TM complete, as spanned by Fn(I).

In HTMI, g is used by queries from a human, which restrict its effective power. Note that g is also built from the TM’s interactions with a human via learning, and thus g of CGKB is Human-bounded Turing complete inductively: at every step, for context c ⊂ g, if CGKB can answer the human directly, then it is already so; otherwise, it learns from the human, extends its g to be so, and answers the human with its new knowledge.

Finally, for each fn ∈ Fn and its corresponding context c, the contextual knowledge k_c is g-bounded complete by the theorem above, which implies fn(k_c) = fn(k_g). So, the context c is always sufficient for emulating fn(k_g) for the Human-bounded Turing complete g. Therefore, CGKB is Human-bounded Turing complete. □

Examples

We provide simple examples of CGKB below. The real possibilities are limited only by Human-bounded Turing completeness, as proven above. In principle, one can use the brain for any AI task, such as playing chess, acting as a generic knowledge base, performing basic cognition, or carrying out basic functions. Note that in practice, an implementation will need to cover more specific details.

when g has learned the knowledge

Given the graph g of CGKB that knows how to call a person,

- Human input: "Call John."

- The HTMI interface canonicalizes the input to <fn = call, i_p = "John">, where "John" is tagged as a proper noun by an NLP POS tagger.

- Algorithm CGKB(g, fn = call, i_p = "John"):
  - Auto-planning: plan = Contextualize(g, call, "John") = (call)-[requires]->(phone_number)-[requires]->(person)
  - Contextual knowledge extraction: c = Contextualize(g, plan, "John") = (me)-[knows]->(John), where the node John contains his phone number. As stated in the Contextualize algorithm, the operation uses filters with graph ranking such as emotions, LSTM, time, constraints, or ambiguity resolution to produce the resulting context graph c.
  - Extract knowledge k_c = Ex(c) = { name: "John", phone: "(234)-567-8900"}, and execute the function call("(234)-567-8900").

when g doesn’t have the knowledge

Given the graph g of CGKB that does not know how to call a person,

- Human input: "Call John."

- The HTMI interface canonicalizes the input to <fn = call, i_p = "John">, where "John" is tagged as a proper noun by an NLP POS tagger.

- Algorithm CGKB(g, fn = call, i_p = "John"):
  - Auto-planning: plan = Contextualize(g, call, "John") = (call)-[requires]->(phone_number). The TM will call learning to inquire about the missing information from the human, update g, and rerun the algorithm to yield (call)-[requires]->(phone_number)-[requires]->(person). The rest follows as before.
  - Contextual knowledge extraction: suppose the TM doesn’t know any “John”; it will attempt to learn from the human, update CGKB, and continue with the task accordingly. This happens for any missing knowledge.
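The learning step in this example can be sketched as a retry loop: re-run auto-planning, and while the plan’s leaf does not match the type of i_p, ask the human what is missing and add the answer to g as a new `requires` edge. This is a minimal sketch; the `ask` callback, the mapping of a proper noun to the type `person`, and the retry limit are all hypothetical. The same pattern would apply when the entity itself (here “John”) is missing.

```python
from typing import Callable, Dict, List, Optional

Requires = Dict[str, List[str]]  # hypothetical: node -> nodes it requires

def auto_plan(requires: Requires, fn_name: str) -> List[str]:
    """Follow the first 'requires' edge from fn_name until a leaf (or a cycle)."""
    chain = [fn_name]
    while requires.get(chain[-1]) and requires[chain[-1]][0] not in chain:
        chain.append(requires[chain[-1]][0])
    return chain

def plan_with_learning(
    requires: Requires,
    fn_name: str,
    i_p_type: str,
    ask: Callable[[str], Optional[str]],
    max_inquiries: int = 3,
) -> List[str]:
    """Retry auto-planning until the plan's leaf matches the type of i_p,
    learning each missing requirement from the human via `ask`."""
    for _ in range(max_inquiries + 1):
        plan = auto_plan(requires, fn_name)
        if plan[-1] == i_p_type:
            return plan
        answer = ask(f"What does {plan[-1]} require?")
        if not answer:
            break  # the human cannot help either
        requires.setdefault(plan[-1], []).append(answer)  # learning: update g
    raise LookupError(f"could not plan {fn_name!r} for input of type {i_p_type!r}")

requires = {"call": ["phone_number"]}  # g does not yet know a number belongs to a person
answers = {"What does phone_number require?": "person"}  # simulated human replies
print(plan_with_learning(requires, "call", "person", answers.get))
# ['call', 'phone_number', 'person']
```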

explain the “thought process”

Another powerful feature of CGKB is that a human can inquire about the TM’s thought process, like “explain how do you call a person?”. In this case:

- fn = explain_thought_process
- i_p = "how do you call a person?"
- plan = (explain_thought_process)-[require]-(requirements)...
- c = (call)-[requires]->(phone_number)

The TM may then return fn(k_c) = "(call)-[requires]->(phone_number)" as its response.
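Since plans are stored as ordinary `requires` edges, explaining the thought process can be as simple as walking those edges and printing them in the notation above. A minimal sketch, assuming the graph is a hypothetical list of (source, relation, target) triples:

```python
from typing import List, Tuple

Edge = Tuple[str, str, str]  # (source, relation, target)

def render_path(chain: List[Edge]) -> str:
    """Render a contiguous chain of edges as (a)-[rel]->(b)-[rel]->(c)."""
    if not chain:
        return ""
    text = f"({chain[0][0]})"
    for _, rel, dst in chain:
        text += f"-[{rel}]->({dst})"
    return text

def explain_thought_process(graph: List[Edge], fn_name: str) -> str:
    """Follow the first 'requires' edge out of each node, starting from fn_name."""
    chain: List[Edge] = []
    node, seen = fn_name, {fn_name}
    while True:
        edge = next((e for e in graph if e[0] == node and e[1] == "requires"), None)
        if edge is None or edge[2] in seen:
            break  # reached a leaf, or a cycle
        chain.append(edge)
        seen.add(edge[2])
        node = edge[2]
    return render_path(chain)

graph = [("call", "requires", "phone_number")]
print(explain_thought_process(graph, "call"))  # (call)-[requires]->(phone_number)
```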

Changelog

Check the Github releases.

Legacy Releases

AIVA was known as Jarvis in version 2. It is now deprecated, but if you need to reference anything from Jarvis, run git checkout tags/v2.0 or check out the releases.

AIVA v3 was last released at v3.2.1, which was full-featured but quite heavy. We retire it in favor of a lighter, more developer-friendly and extensible version.

Roadmap

- a built-in graph brain for ad-hoc knowledge encoding, using CGKB and HTMI

- a built-in NLP intent-parsing engine

Contributing

We’d love for you to contribute and make AIVA even better for all developers. We’re mainly interested in something generic and foundational, e.g. adding a client for a new language, improving the NLP, adding a useful generic module, or adding more adapters like Skype or Twilio.